You made it here, that's great! In the following two sections we'll provide you with a self-assessment challenge and general information about the platform and submission requirements. Make sure to read through both before trying to submit your solution.

Parse a JSON object provided to you via stdin and concatenate the strings in the "snippets" array, placing the "delimiter" between the snippets. Then calculate the SHA-256 hash of the resulting string and print the result in hex as a JSON object of the form

{"hash": <the sha256-hash>}

to stdout. For example, if the given JSON is

{"snippets": ["a", "b", "c"], "delimiter": "-"}

you have to calculate the sha256-hash of the string "a-b-c".

Your solution should then be:

{"hash": "cbd2be7b96f770a0326948ebd158cf539fab0627e8adbddc97f7a65c6a8ae59a"}
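The steps above could be sketched in Python roughly as follows. This is a minimal sketch, not a reference solution: it assumes the input is a single JSON object, that the joined string is encoded as UTF-8 before hashing, and the function names `hash_snippets` and `main` are purely illustrative.

```python
import hashlib
import json
import sys


def hash_snippets(payload: dict) -> dict:
    """Join the snippets with the delimiter and hash the joined string."""
    joined = payload["delimiter"].join(payload["snippets"])
    # Assumption: the string is hashed as UTF-8 bytes.
    digest = hashlib.sha256(joined.encode("utf-8")).hexdigest()
    return {"hash": digest}


def main(stdin=sys.stdin, stdout=sys.stdout) -> None:
    """Read one JSON object from stdin and write the result object to stdout."""
    payload = json.load(stdin)
    json.dump(hash_snippets(payload), stdout)
    stdout.write("\n")
```

In a main.py, ending the file with a call to `main()` would wire the sketch to stdin and stdout.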

Upload your solution to the submission platform and check whether it worked correctly. Make sure to thoroughly read the next section so that your solution fulfills the submission requirements.

During the course you'll step into the shoes of a sophisticated security analyst with a machine-learning background: you'll try to detect whether a given piece of code contains a vulnerability. But what would a security analyst be who finds a vulnerability without trying to fix it? Well, probably less work for nine credits. So you'll also go the extra mile and try to repair a vulnerable function in a second step.

Why do we need a platform for all this? Well, that's the best part. Because we know which code is vulnerable, what the software should do, and which input will crash it before the fix, we can test all of this and calculate a score for each submission. During the semester, you'll find a scoreboard showing each team's detection and repair performance. To make all this possible, you'll of course have to upload your solutions to this platform.

To get you started, we prepared a small test to exemplify the process, which will be very similar during the semester. We strongly encourage you to work through it, as it shouldn't take long and ensures that you'll have a smooth start once the course begins.

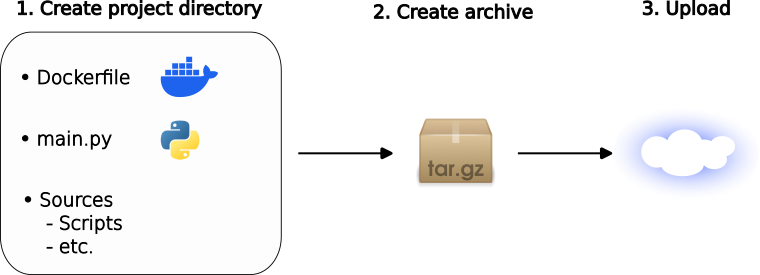

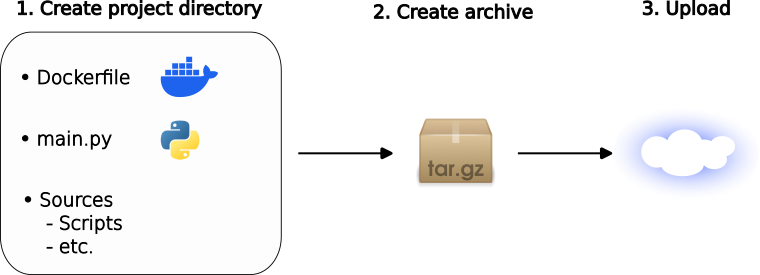

The general structure is fairly easy, as you can see in Figure 1. You just set up a directory with a certain structure (more on that in a second), archive it and upload the archive to this platform. We'll automatically unpack the archive, run your code and update the results on the scoreboard page. For the self-assessment, the result only indicates whether your archive was created correctly and the solution to the test challenge was correct. During the course, the scoreboard will probably be a bit more interesting!

So let's dive into the directory structure real quick. There are only three requirements which have to be fulfilled:

aura:assessment built from this Dockerfile.

submission.tar.gz
├─ Dockerfile
├─ main.py
┊

To create the .tar.gz-archive, run tar czf submission.tar.gz * from your project-directory's root. That's it, your archive is ready to be uploaded.
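If you prefer to build the archive from Python instead of the shell, the same idea could look roughly like the sketch below. It assumes, like the shell glob `*`, that hidden files are left out and that every entry sits at the top level of the archive. The helper name `build_archive` is hypothetical and not part of the platform.

```python
import tarfile
from pathlib import Path


def build_archive(project_root: str, archive_path: str = "submission.tar.gz") -> list[str]:
    """Pack the entries of project_root at the top level of a .tar.gz archive."""
    root = Path(project_root)
    target = Path(archive_path).resolve()
    with tarfile.open(target, "w:gz") as archive:
        for entry in sorted(root.iterdir()):
            # Skip hidden entries (as the shell glob does) and the archive itself.
            if entry.name.startswith(".") or entry.resolve() == target:
                continue
            # arcname keeps the entry at the archive root, without parent folders.
            archive.add(entry, arcname=entry.name)
    # Re-open the archive and report what it contains.
    with tarfile.open(target, "r:gz") as archive:
        return archive.getnames()
```

Calling `build_archive(".")` from the project root writes submission.tar.gz into the current directory and returns the member names, which is a quick way to check that Dockerfile and main.py ended up at the top level.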